Model Parameters

Large language models generate text one token at a time, predicting each token from the sequence of tokens that came before it. Rather than always choosing the single most probable token (which would lead to boring and repetitive outputs), the model selects each generated token from a "pool" of possible tokens.

The following samplers specify which tokens enter the pool and how one is selected from it. Adjusting these parameters can drastically change how your model behaves.

Base Parameters

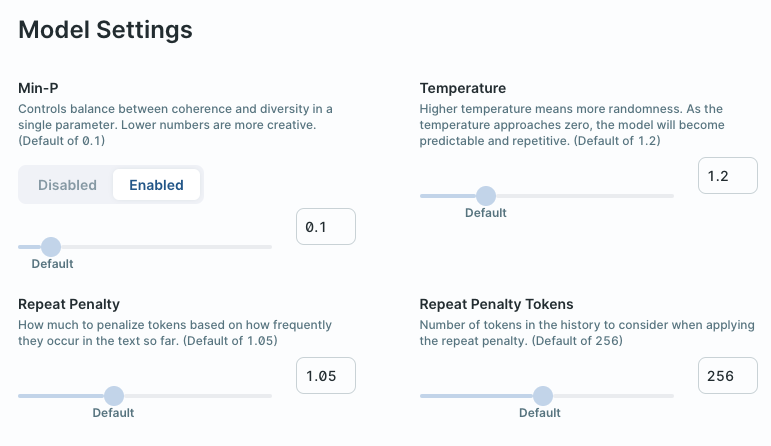

Temperature

- Default: 1.2

- Definition: Controls the "creativity" of the model by setting how likely the model is to choose a less probable token.

- Explanation: A very low temperature (0 to 0.2) can lead to repetition, as the model will lean towards repeating the previous sequence ("cat cat cat cat"). A very high temperature (2.0 or higher) can lead to nonsensical outputs, as the next token will be unrelated to the previous sequence ("cat tree stew"). Typical values are between 0.5 ("cat climbed a tree") and 1.2 ("cat spoke eloquently"). However, when using Min-P, temperature can be set much higher without causing incoherent output.
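
As a rough illustration of the effect described above, the sketch below uses a common formulation in which the model's raw scores (logits) are divided by the temperature before being converted to probabilities. This is an assumption about how temperature is typically applied, not a description of Backyard AI's internals; the function name and the example scores are hypothetical.

```python
import math

def softmax_with_temperature(logits, temperature):
    """Turn raw scores into probabilities, scaled by temperature (illustrative only)."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [score / temperature for score in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(score - peak) for score in scaled]
    total = sum(exps)
    return [value / total for value in exps]

# Hypothetical scores for three candidate tokens, most likely first.
logits = [2.0, 1.0, 0.0]

low = softmax_with_temperature(logits, 0.2)   # top token takes almost all the probability
high = softmax_with_temperature(logits, 2.0)  # probability is spread more evenly

print([round(p, 3) for p in low])
print([round(p, 3) for p in high])
```

At the low setting the top token is chosen almost every time, which is where repetition comes from; at the high setting the less probable tokens get a much larger share.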

Min-P

- Default: 0.1

- Definition: Sets the cutoff for the selection pool as the Min-P value multiplied by the probability of the top token. Tokens with a probability below this cutoff are removed from the pool.

- Explanation: Min-P dynamically adjusts the pool of tokens based on how likely the top token is. When the top token is very likely, the cutoff is high, and when it is less likely, the cutoff drops and lower probability tokens are let into the pool. The parameter is a proportion (between 0 and 1) of the top token probability. For example, with Min-P at 0.1 and a top token probability of 0.9, only tokens with a probability of at least 0.09 stay in the pool. Increasing Min-P constricts the pool to more likely tokens and reducing it widens the pool. A Min-P of 1 would only include the top token while a Min-P of 0 would include all tokens. Min-P is better than alternatives at giving a consistent level of variety between generation steps.
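
The cutoff rule above can be sketched in a few lines. This is a minimal illustration under stated assumptions: `min_p_filter` is a hypothetical helper, the token probabilities are made up, and renormalizing the surviving tokens is a common choice rather than something this page specifies.

```python
def min_p_filter(probs, min_p):
    """Keep tokens whose probability is at least min_p times the top probability."""
    cutoff = min_p * max(probs.values())
    kept = {token: p for token, p in probs.items() if p >= cutoff}
    total = sum(kept.values())
    # Renormalize so the remaining probabilities sum to 1 (assumed step).
    return {token: p / total for token, p in kept.items()}

# Hypothetical distributions: one where the model is confident, one where it is not.
confident = {"tree": 0.90, "fence": 0.06, "roof": 0.03, "stew": 0.01}
uncertain = {"tree": 0.30, "fence": 0.28, "roof": 0.22, "stew": 0.20}

print(list(min_p_filter(confident, 0.1)))  # cutoff 0.09: only "tree" survives
print(list(min_p_filter(uncertain, 0.1)))  # cutoff 0.03: all four tokens survive
```

The same Min-P value produces a tight pool when the model is confident and a wide pool when it is not, which is the dynamic behavior described above.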

Repeat Penalty

- Default: 1.05

- Definition: How heavily a repeated token is penalized.

- Explanation: To avoid unnecessary repetition, repeat penalty looks at past tokens and reduces the probability of selecting a token that has already occurred. A value of 1.0 is inactive. Typical values range from 1.0 to 1.2. Backyard AI keeps the default close to inactive, as a strong repeat penalty is not typically required when using Min-P.

Repeat Penalty Tokens

- Default: 256

- Definition: The number of trailing tokens in the context to consider when applying the repeat penalty.

- Explanation: Adjust this to determine how far back the repeat penalty looks in the context. Increasing it penalizes tokens that appeared further back, so repetition is discouraged over a longer stretch of text.
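
To show how Repeat Penalty and Repeat Penalty Tokens interact, here is a minimal sketch. It assumes one widely used convention (positive scores are divided by the penalty, negative scores are multiplied by it); Backyard AI's exact rule is not stated on this page. The function name, scores, and context are hypothetical.

```python
def apply_repeat_penalty(logits, context, penalty=1.05, last_n=256):
    """Lower the score of any token seen in the last `last_n` context tokens."""
    # Guard against last_n == 0, since context[-0:] would return the whole list.
    recent = set(context[-last_n:]) if last_n > 0 else set()
    adjusted = dict(logits)
    for token in recent:
        if token in adjusted:
            score = adjusted[token]
            # Assumed convention: push the score away from "likely" in both cases.
            adjusted[token] = score / penalty if score > 0 else score * penalty
    return adjusted

# Hypothetical raw scores and a short context.
logits = {"cat": 2.0, "dog": 1.0, "the": -1.0}
context = ["the", "cat"]

penalized = apply_repeat_penalty(logits, context, penalty=1.2)
inactive = apply_repeat_penalty(logits, context, penalty=1.0)
short_window = apply_repeat_penalty(logits, context, penalty=1.2, last_n=1)

print(penalized)
print(short_window)
```

With a penalty of 1.0 the scores are unchanged, and with a window of 1 only the most recent token ("cat") is penalized.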

Optional Parameters

Top P

- Default: 0.9

- Definition: Reduces the pool to the most probable tokens whose probabilities add up to the Top P value or higher.

- Explanation: Top P sampling selects the next token from a small group of likely options, rather than considering every possible token. It improves quality by dropping the long tail of unlikely tokens. For example, if you set Top P to 0.9, then only the most probable tokens that add up to 90% of the probability mass will be kept in the pool.
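
The Top P rule can be sketched as follows: sort tokens by probability, then keep adding them to the pool until their running total reaches the Top P value. The helper name and the probabilities are hypothetical, and renormalizing the kept tokens is an assumed final step.

```python
def top_p_filter(probs, top_p):
    """Keep the most probable tokens until their cumulative probability reaches top_p."""
    ranked = sorted(probs.items(), key=lambda item: item[1], reverse=True)
    kept = []
    cumulative = 0.0
    for token, p in ranked:
        kept.append((token, p))
        cumulative += p
        if cumulative >= top_p:
            break
    total = sum(p for _, p in kept)
    # Renormalize so the remaining probabilities sum to 1 (assumed step).
    return {token: p / total for token, p in kept}

# Hypothetical next-token probabilities.
probs = {"tree": 0.50, "fence": 0.30, "roof": 0.15, "stew": 0.05}

pool = top_p_filter(probs, 0.9)
print(list(pool))  # "stew" falls outside the top 90% of probability mass
```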

Top K

- Default: 30

- Definition: The number of high probability tokens that are kept in the selection pool.

- Explanation: If you set Top K to 10, then only the 10 most probable tokens will be kept in the pool. If you set it to 100, then the 100 most probable tokens will be kept in the pool. This is a good way to reduce the size of the pool, but it is less dynamic than Top P, because the pool size stays the same no matter how confident the model is.
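
For comparison, a Top K filter is simpler: it keeps a fixed number of tokens regardless of how the probabilities are distributed. As with the other sketches, `top_k_filter` and the probabilities are hypothetical, and renormalization is an assumed step.

```python
def top_k_filter(probs, k):
    """Keep only the k most probable tokens."""
    ranked = sorted(probs.items(), key=lambda item: item[1], reverse=True)[:k]
    total = sum(p for _, p in ranked)
    # Renormalize so the remaining probabilities sum to 1 (assumed step).
    return {token: p / total for token, p in ranked}

# Hypothetical next-token probabilities.
probs = {"tree": 0.50, "fence": 0.30, "roof": 0.15, "stew": 0.05}

top_two = top_k_filter(probs, 2)
print(list(top_two))  # the two most probable tokens, whatever their probabilities
```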

© 2025 Backyard AI